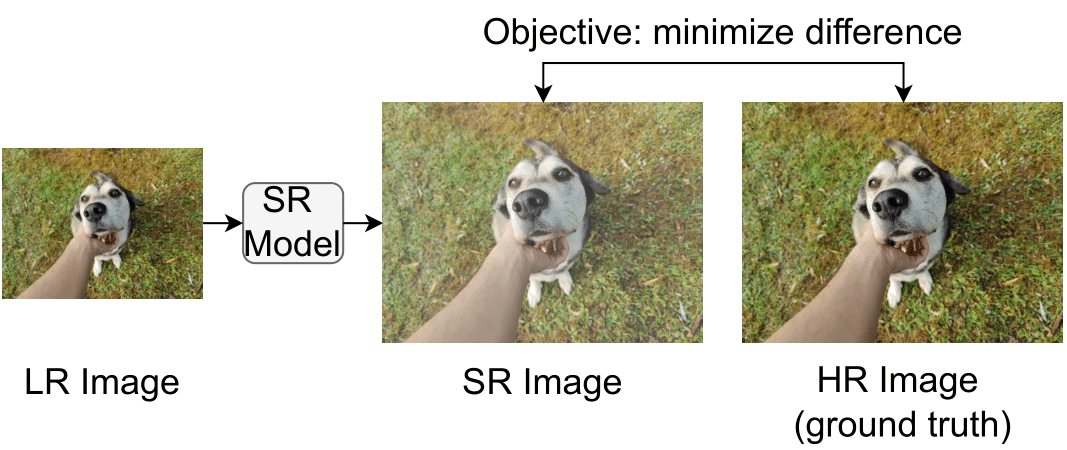

Super-Resolution (SR) is the process of enhancing Low-Resolution (LR) images to High-Resolution (HR). Its applications range from natural images to highly advanced satellite and medical imaging. Despite its long history, SR remains a challenging task in computer vision because it is notoriously ill-posed: several HR images can be valid for any given LR image, since they may differ in many aspects such as brightness and coloring. These fundamental uncertainties in the relation between LR and HR images make SR a complex research task. Thanks to rapid advances in Deep Learning (DL), SR has made significant progress in recent years. Unfortunately, entering this field is overwhelming because of the abundance of publications, and it is knotty work to get an overview of their respective advantages and disadvantages. This Open Access work unwinds some of the most representative advances in the crowded field of SR. It discusses common knowledge in image SR, standard procedures, and, most importantly, recent advances and future directions. New researchers will find this work an easy starting point into the topic of image SR, and more advanced researchers will get updated on current trends and can use this work as a reference for known procedures, metrics, and explanations.
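To make the ill-posedness concrete, here is a minimal sketch assuming a simple box-averaging degradation (benchmarks more commonly use bicubic downsampling, blur, and noise; the function name `box_downsample` is hypothetical). Two visibly different HR patches collapse to the identical LR pixel:

```python
import numpy as np

def box_downsample(hr: np.ndarray, scale: int) -> np.ndarray:
    """Degrade an HR image by averaging each non-overlapping scale x scale block.

    Simplifying assumption: box averaging instead of bicubic downsampling.
    """
    h, w = hr.shape
    assert h % scale == 0 and w % scale == 0, "size must be divisible by scale"
    # blocks[i, a, j, b] == hr[i * scale + a, j * scale + b]
    blocks = hr.reshape(h // scale, scale, w // scale, scale)
    return blocks.mean(axis=(1, 3))

# Two clearly different 2x2 HR patches ...
hr_a = np.array([[0.0, 1.0],
                 [1.0, 0.0]])
hr_b = np.array([[1.0, 0.0],
                 [0.0, 1.0]])

# ... map to the same 1x1 LR pixel, so the LR image alone cannot
# tell us which HR image it came from.
lr_a = box_downsample(hr_a, 2)
lr_b = box_downsample(hr_b, 2)
print(lr_a, lr_b, np.array_equal(lr_a, lr_b))
```

Any SR model receiving this LR pixel must pick one of many plausible HR patches, which is exactly the uncertainty described above.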

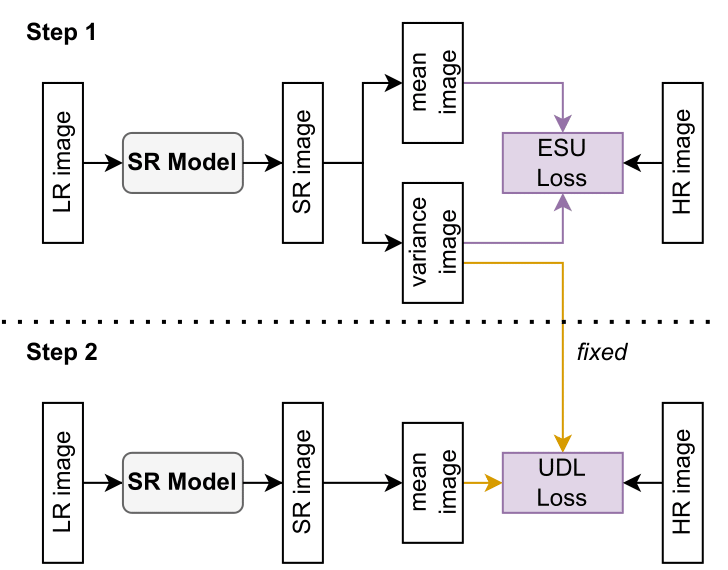

The work commences with an introduction to the basic definitions and terminology. It then proceeds to discuss evaluation metrics for SR solutions. Along the way, we introduce various datasets that provide diverse data types, such as 8K-resolution images or video sequences. It further delves into the different learning objectives of Super-Resolution: Regression-based SR (also with uncertainty), Generative SR, and, more recently, Denoising Diffusion Probabilistic Models (DDPMs).
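As an illustration of one widely used evaluation metric, the following sketch computes the Peak Signal-to-Noise Ratio (PSNR), defined as 10 · log10(MAX² / MSE). It assumes 8-bit images (`max_value=255`) compared over all pixels; many SR papers instead evaluate on the luminance (Y) channel and crop image borders, which this sketch does not do.

```python
import numpy as np

def psnr(reference: np.ndarray, estimate: np.ndarray, max_value: float = 255.0) -> float:
    """Peak Signal-to-Noise Ratio in dB: 10 * log10(MAX^2 / MSE)."""
    ref = reference.astype(np.float64)   # avoid uint8 overflow in the difference
    est = estimate.astype(np.float64)
    mse = np.mean((ref - est) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_value ** 2 / mse))

hr = np.full((4, 4), 100, dtype=np.uint8)
sr = hr + 1  # every pixel off by one gray level, so MSE = 1
print(round(psnr(hr, sr), 2))
```

Since every pixel differs by exactly one gray level, the MSE is 1 and the PSNR equals 20 · log10(255), roughly 48.13 dB.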

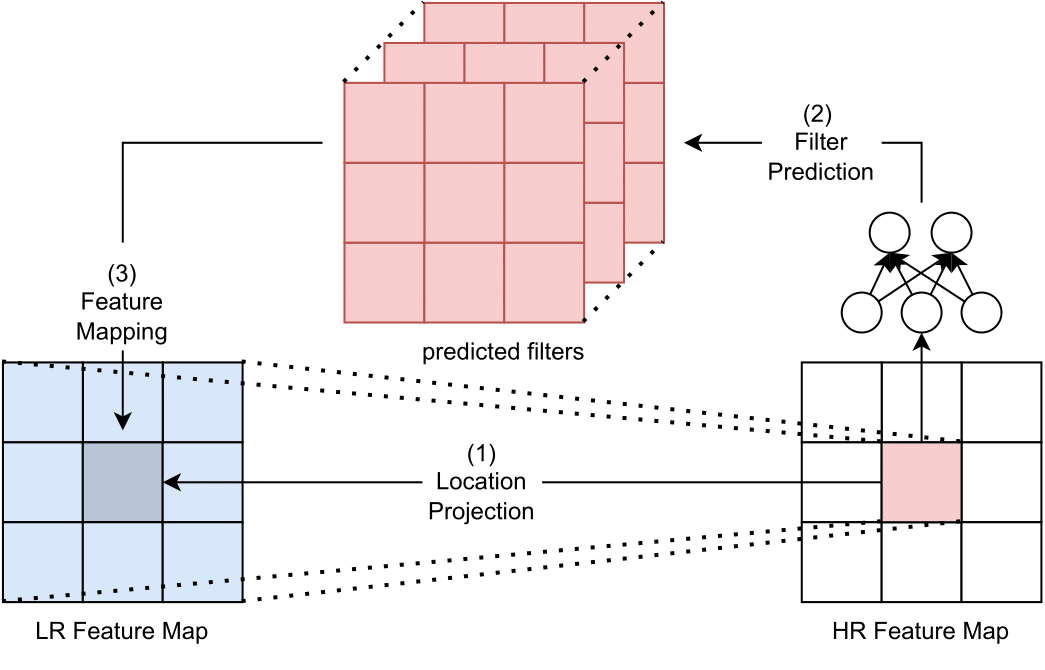

This work also dives into the world of upsampling methods, a critical aspect of image SR. It covers the whys and hows of interpolation-based and learning-based upsampling techniques, and the supplemental material additionally covers problems that arise with upsampling (e.g., artifacts). Our work also explores the challenges and potential solutions of flexible upsampling with arbitrary scaling factors for real-world scenarios.
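One common learning-based upsampler is sub-pixel convolution (pixel shuffle): a convolution at LR resolution outputs C·r² channels, which are then rearranged into a C-channel image that is r times larger. The sketch below shows only the rearrangement step in NumPy, mirroring the element ordering of PyTorch's `torch.nn.functional.pixel_shuffle`; the preceding learned convolution is omitted.

```python
import numpy as np

def pixel_shuffle(features: np.ndarray, r: int) -> np.ndarray:
    """Rearrange a (C*r*r, H, W) array into (C, H*r, W*r).

    Element mapping: out[c, h*r + i, w*r + j] = in[c*r*r + i*r + j, h, w].
    """
    c_in, h, w = features.shape
    assert c_in % (r * r) == 0, "channel count must be divisible by r^2"
    c = c_in // (r * r)
    x = features.reshape(c, r, r, h, w)   # split channels into (c, i, j)
    x = x.transpose(0, 3, 1, 4, 2)        # reorder to (c, h, i, w, j)
    return x.reshape(c, h * r, w * r)     # interleave sub-pixels spatially

# Four LR feature maps of size 1x1 become one 2x2 HR map.
lr_features = np.arange(4).reshape(4, 1, 1)
print(pixel_shuffle(lr_features, 2))
```

Because the network does all its work at LR resolution and only rearranges at the end, this upsampler is cheap; its fixed integer factor r is also why arbitrary scaling factors need other solutions.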

Moreover, it surveys additional learning strategies such as curriculum learning, enhanced predictions, learned degradation, network fusion, multi-task learning, and normalization techniques like the Adaptive Deviation Modulator (AdaDM).
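A widely used form of enhanced prediction is geometric self-ensemble: the LR input is flipped and rotated into eight variants, each variant is super-resolved, the outputs are transformed back, and the results are averaged. A minimal NumPy sketch for single-channel images follows; `nearest_x2` is a hypothetical stand-in for a trained x2 network.

```python
import numpy as np

def self_ensemble(lr: np.ndarray, model) -> np.ndarray:
    """Average the model's predictions over the 8 flips/rotations of the input.

    `model` is any callable mapping a 2D LR array to a 2D SR array.
    """
    predictions = []
    for k in range(4):                 # rotations by 0, 90, 180, 270 degrees
        for flip in (False, True):     # with and without horizontal flip
            x = np.rot90(lr, k)
            if flip:
                x = np.fliplr(x)
            y = model(x)
            # Undo the transform in reverse order so all outputs align.
            if flip:
                y = np.fliplr(y)
            y = np.rot90(y, -k)
            predictions.append(y)
    return np.mean(predictions, axis=0)

def nearest_x2(img: np.ndarray) -> np.ndarray:
    """Hypothetical stand-in for a trained x2 SR network."""
    return np.repeat(np.repeat(img, 2, axis=0), 2, axis=1)

lr = np.arange(6, dtype=float).reshape(2, 3)
sr = self_ensemble(lr, nearest_x2)
print(sr.shape)
```

The trade-off is eight forward passes per image at inference time in exchange for a prediction that no longer depends on the input's orientation.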

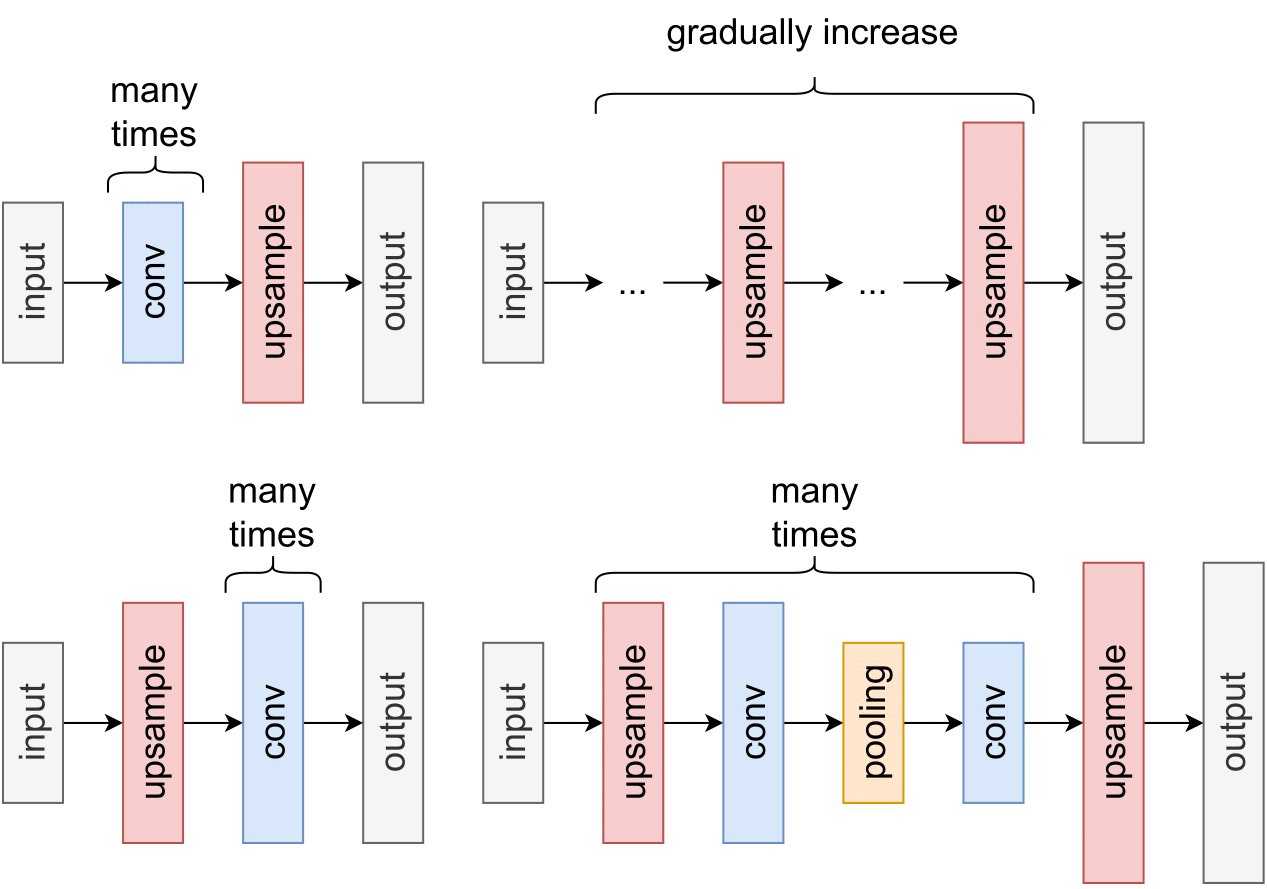

This paper examines different SR models and architectures in detail and provides comprehensive explanations as well as unified visualizations. Architectures are categorized into different branches of image SR, such as Pre-Upsampling, Post-Upsampling, Progressive Upsampling, and Iterative Up-and-Down Upsampling.
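A practical difference between the first two branches is where the expensive part of the network runs. In this sketch, `ShapeRecorder` is a hypothetical identity "body" that only logs the resolution it receives, and nearest-neighbor repetition stands in for the upsampling step (typically bicubic interpolation in pre-upsampling models and a learned layer in post-upsampling ones).

```python
import numpy as np

def nearest_upsample(img: np.ndarray, scale: int) -> np.ndarray:
    """Placeholder upsampler (real models use bicubic or a learned layer)."""
    return np.repeat(np.repeat(img, scale, axis=0), scale, axis=1)

class ShapeRecorder:
    """Hypothetical network body that only records the resolution it sees."""
    def __init__(self):
        self.seen = []

    def __call__(self, x: np.ndarray) -> np.ndarray:
        self.seen.append(x.shape)
        return x  # identity in place of learned feature processing

def pre_upsampling(lr, body, scale):
    # Upsample first, then refine: the body runs at HR resolution.
    return body(nearest_upsample(lr, scale))

def post_upsampling(lr, body, scale):
    # Process at LR resolution and upsample only at the very end.
    return nearest_upsample(body(lr), scale)

lr = np.zeros((32, 32))
pre_body, post_body = ShapeRecorder(), ShapeRecorder()
pre_upsampling(lr, pre_body, 4)
post_upsampling(lr, post_body, 4)
print(pre_body.seen, post_body.seen)  # [(128, 128)] [(32, 32)]
```

With a scale factor of 4, the pre-upsampling body processes 4² = 16 times as many pixels, which is why post-upsampling designs are usually cheaper, at the cost of having to learn the upsampling itself.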

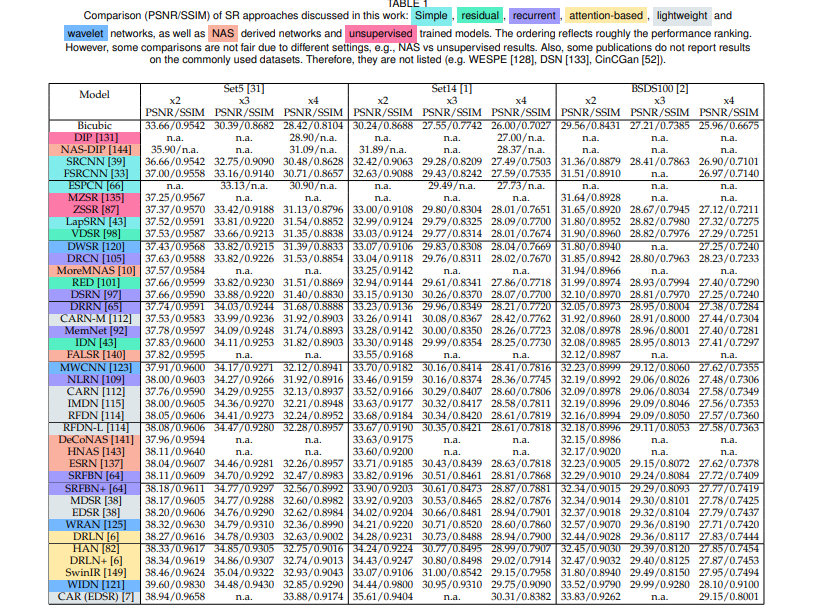

We discuss the following primary categories: Simple Networks, Residual Networks, Recurrent-Based Networks, Lightweight Models, and Wavelet-based Models. These state-of-the-art architectures are examined along with their pros and cons. You will also get a scoop of Unsupervised SR and Neural Architecture Search for SR! Last but not least, we discuss the future of SR and the challenges that lie ahead. So, gather your party and embark on this thrilling adventure into the diverse and intricate world of deep learning-based super-resolution networks, and unlock its secrets! Spoiler warning: big tables included.
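Residual and recurrent (recursive) networks can be illustrated together: a residual block adds a learned correction to its input, and a recursive design reuses the same block several times with shared weights. This sketch simplifies each convolution to a bias-free 1x1 convolution (a per-pixel channel matrix product); the function names are hypothetical.

```python
import numpy as np

def relu(x: np.ndarray) -> np.ndarray:
    return np.maximum(x, 0.0)

def residual_block(x, w1, w2):
    """x: (H, W, C) features; w1, w2: (C, C) weights of 1x1 convolutions.

    Output = x + relu(x W1) W2: the block only learns a correction (the
    residual) on top of its input, which eases training of deep networks.
    """
    return x + relu(x @ w1) @ w2

def recursive_residual(x, w1, w2, steps):
    """Apply the same residual block repeatedly (shared weights), gaining
    depth without adding parameters, as recursive SR designs do."""
    for _ in range(steps):
        x = residual_block(x, w1, w2)
    return x

rng = np.random.default_rng(0)
features = rng.standard_normal((8, 8, 16))
w1 = rng.standard_normal((16, 16)) * 0.1
w2 = np.zeros((16, 16))  # zero last layer: each block starts as the identity
out = recursive_residual(features, w1, w2, steps=3)
print(np.allclose(out, features))
```

Starting from an identity mapping is one reason residual designs train stably even when stacked or unrolled many times.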